Abstract

Convolutional Neural Networks (CNNs) are a well-established tool for car damage detection, but they can struggle to localize the specific regions of an image that are damaged. To address this, a Convolutional Attention Module (CAM) can be integrated into the CNN architecture. CAM applies two attention mechanisms in parallel: channel attention and spatial attention. Channel attention weights the feature channels extracted by the CNN according to their relevance to damage detection, emphasizing the most informative ones. Spatial attention, in turn, weights locations within the feature maps, directing the model toward image regions that are likely to contain damage. By combining these complementary mechanisms, CAM helps the CNN focus on the damaged areas of car images, which in turn improves the accuracy of car damage detection.
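To make the parallel channel/spatial design concrete, the following is a minimal PyTorch sketch of such a module. It is not the authors' implementation; every internal detail is an assumption. The channel branch (average- and max-pooled descriptors fed through a shared bottleneck MLP, reduction ratio 16) and the spatial branch (a 7×7 convolution over channel-pooled maps) are borrowed from CBAM. The fusion rule (sum the two branches' logits, apply a sigmoid, and add the result back residually) follows BAM. The class names `ChannelAttention`, `SpatialAttention` and `CAM` are illustrative.

```python
import torch
import torch.nn as nn


class ChannelAttention(nn.Module):
    """Channel branch (assumed CBAM-style): squeeze H and W, score each channel."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        hidden = max(channels // reduction, 1)
        # Shared bottleneck MLP implemented with 1x1 convolutions
        self.mlp = nn.Sequential(
            nn.Conv2d(channels, hidden, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(hidden, channels, kernel_size=1),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        avg = torch.mean(x, dim=(2, 3), keepdim=True)  # (N, C, 1, 1)
        mx = torch.amax(x, dim=(2, 3), keepdim=True)   # (N, C, 1, 1)
        return self.mlp(avg) + self.mlp(mx)            # channel logits, (N, C, 1, 1)


class SpatialAttention(nn.Module):
    """Spatial branch (assumed CBAM-style): pool across channels, score each location."""

    def __init__(self, kernel_size: int = 7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Stack channel-wise mean and max maps -> (N, 2, H, W)
        pooled = torch.cat(
            [x.mean(dim=1, keepdim=True), x.amax(dim=1, keepdim=True)], dim=1
        )
        return self.conv(pooled)  # spatial logits, (N, 1, H, W)


class CAM(nn.Module):
    """Both branches see the same input in parallel; fusion rule assumed from BAM."""

    def __init__(self, channels: int, reduction: int = 16, kernel_size: int = 7):
        super().__init__()
        self.channel = ChannelAttention(channels, reduction)
        self.spatial = SpatialAttention(kernel_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # (N, C, 1, 1) + (N, 1, H, W) broadcasts to a full (N, C, H, W) attention map
        attn = torch.sigmoid(self.channel(x) + self.spatial(x))
        # Residual refinement: emphasize salient features without discarding the input
        return x + x * attn
```

For example, `CAM(256)` applied to a tensor of shape `(2, 256, 56, 56)` returns a tensor of the same shape, so the module can be inserted after any block whose output has 256 channels. Summing the two logits before the sigmoid lets a feature be emphasized when either its channel or its location is judged relevant.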

Model

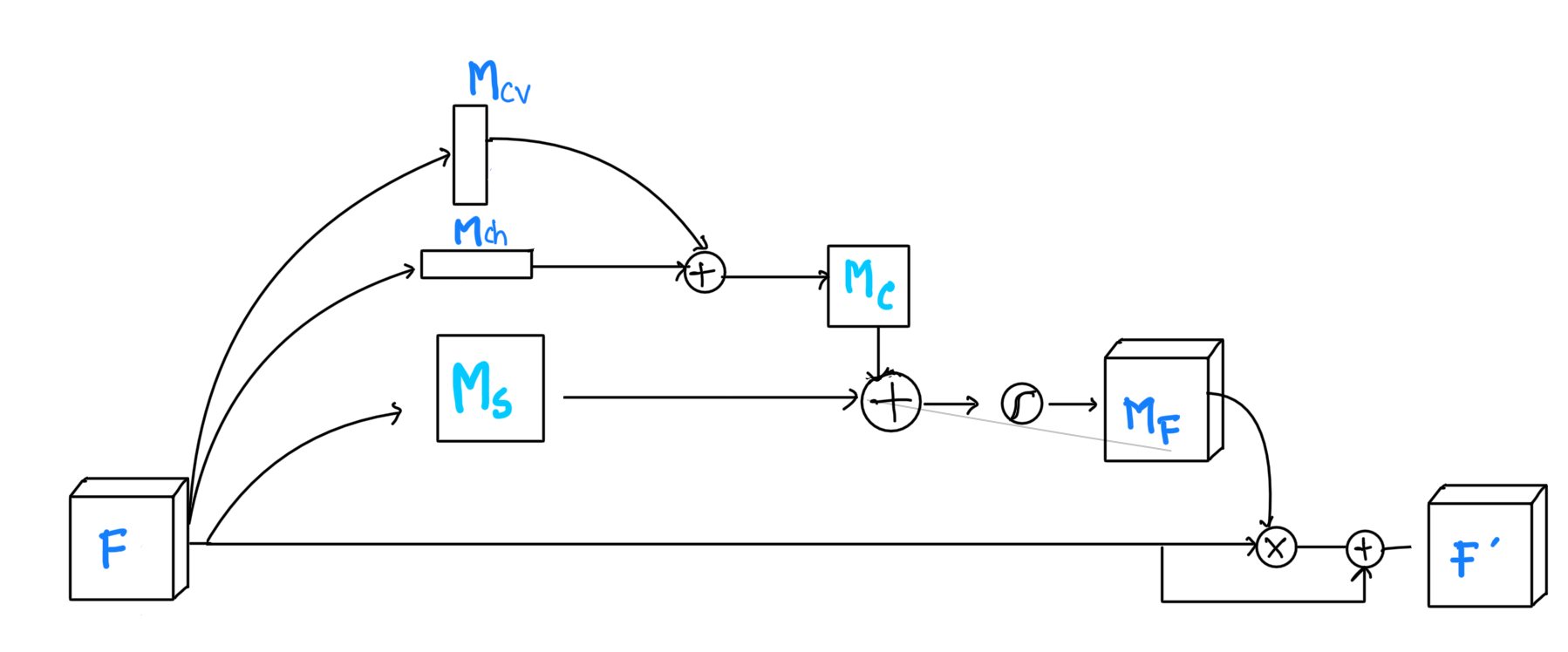

The proposed CarDNet model has two main parts: a ResNet50 backbone and an enhanced attention module. The key innovation is this refined module, called CAM (Convolutional Attention Module), which is designed to better discriminate between damage classes. CAM consists of two parallel sub-modules, one for channel attention and one for spatial attention. A CAM module is placed after each residual block of ResNet50, where it dynamically refines the intermediate feature map passed to it.

The architecture of CarDNet.