Abstract

Accurate and early detection of brain tumors is essential for effective treatment planning. However, deep learning (DL) models often struggle with MRI-based tumor detection because tumors vary widely in size, shape, and location. Traditional diagnostic techniques are limited by subjectivity and low interpretability, while many DL models operate as black boxes, which reduces clinical trust. Attention mechanisms can help by directing the model’s focus to the most informative regions of an image, improving both accuracy and interpretability. However, existing attention methods often fail to capture the complex spatial and contextual features of medical images such as MRI scans. In this study, we propose a novel attention-based, explainable DL framework to improve the performance and transparency of brain tumor diagnosis. We introduce the Strip-Style Pooling Attention Network (SSPANet), which combines channel and spatial attention to capture intricate imaging features more effectively. We evaluated SSPANet with VGG16 and ResNet50 as backbone architectures and compared it against existing attention methods integrated into the same backbones. Among all configurations, ResNet50 combined with SSPANet achieves the best results: 97% accuracy, precision, recall, and F1-score, and 95% Cohen’s Kappa and Matthews Correlation Coefficient (MCC). For interpretability, we apply GradCAM, GradCAM++, and EigenGradCAM to the attention-guided DL models. The ResNet50 + SSPANet + GradCAM++ combination consistently provides superior visual explanations, highlighting SSPANet’s ability to capture complex spatial-contextual information effectively. We also provide a theoretical analysis supporting the efficiency and effectiveness of the proposed attention mechanism.
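The evaluation metrics reported above can all be derived from a confusion matrix. The NumPy function below is a minimal sketch, assuming rows index the true class and columns the predicted class, macro averaging for precision, recall, and F1 (an assumption, since the averaging scheme is not stated here), and the multiclass (Gorodkin) form of MCC, which reduces to the usual binary MCC for two classes. The function name `classification_metrics` and the confusion matrix in the usage lines are hypothetical illustrations, not the paper's code or data.

```python
import numpy as np


def classification_metrics(cm):
    """Metrics from a confusion matrix cm[true, predicted] with any number of classes."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    tp = np.diag(cm)         # correctly classified samples per class
    pred = cm.sum(axis=0)    # predicted count per class (column sums)
    true = cm.sum(axis=1)    # true count per class (row sums)

    def safe_div(a, b):
        # Element-wise a / b, returning 0 where the denominator is 0.
        return np.divide(a, b, out=np.zeros_like(a), where=b != 0)

    precision = safe_div(tp, pred)
    recall = safe_div(tp, true)
    f1 = safe_div(2 * precision * recall, precision + recall)

    p_o = tp.sum() / n                  # observed agreement (= accuracy)
    p_e = (pred * true).sum() / n**2    # agreement expected by chance
    kappa = (p_o - p_e) / (1 - p_e)     # Cohen's Kappa

    # Multiclass MCC: (c*s - sum_k p_k t_k) / sqrt((s^2 - sum p_k^2)(s^2 - sum t_k^2))
    num = tp.sum() * n - (pred * true).sum()
    den = np.sqrt((n**2 - (pred**2).sum()) * (n**2 - (true**2).sum()))
    mcc = num / den if den > 0 else 0.0

    return {
        "accuracy": p_o,
        "precision": precision.mean(),  # macro average (assumed)
        "recall": recall.mean(),
        "f1": f1.mean(),
        "kappa": kappa,
        "mcc": mcc,
    }


# Usage on a made-up two-class confusion matrix.
m = classification_metrics([[40, 10], [5, 45]])
print(round(m["accuracy"], 2), round(m["kappa"], 2), round(m["mcc"], 4))  # 0.85 0.7 0.7035
```

Kappa and MCC both correct for chance agreement, which is why they typically sit below accuracy when classes are imbalanced or errors are skewed toward one class.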

Model

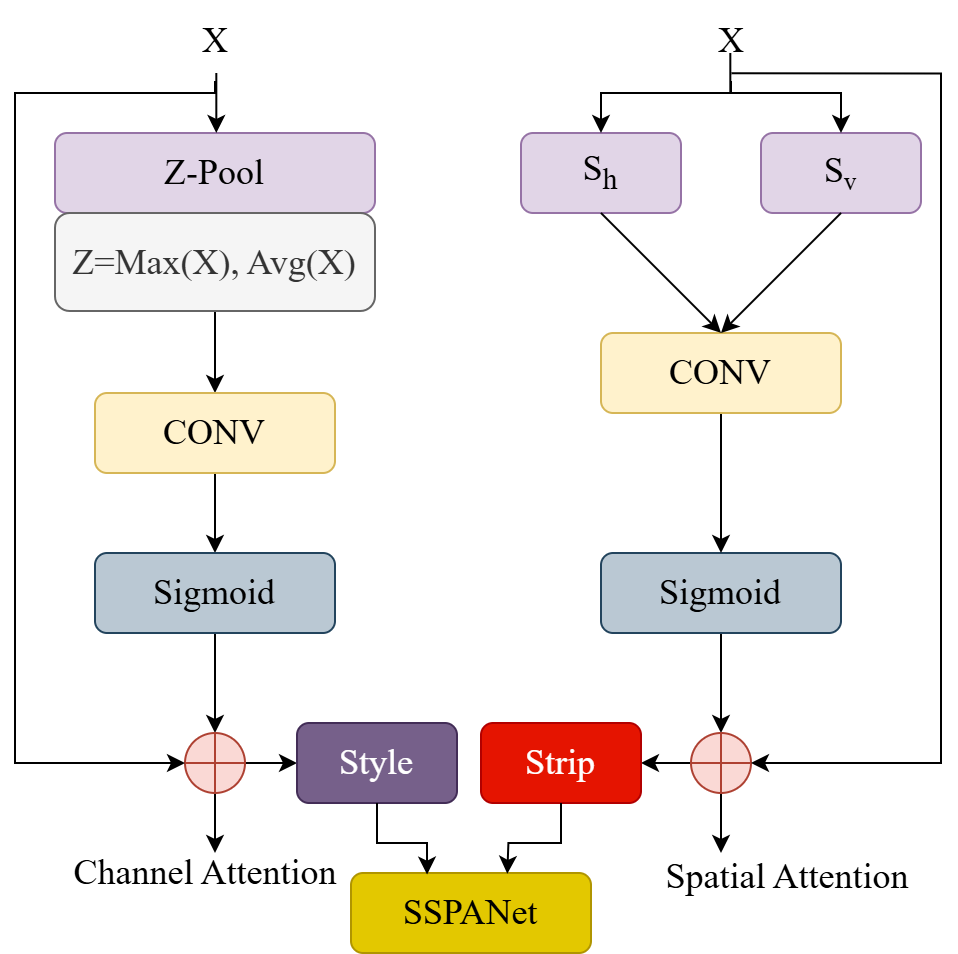

The Strip-Style Pooling Attention Network (SSPANet) is a hybrid attention mechanism that enhances convolutional neural networks by jointly modeling channel importance, spatial context, and textural variation. Its channel attention branch uses Z-pooling, which combines average and max pooling to produce richer activation statistics for robust feature weighting. The spatial attention branch incorporates strip pooling, which captures long-range dependencies along the horizontal and vertical directions, while style pooling extracts feature statistics such as the mean and standard deviation (first- and second-order moments) to encode fine-grained texture information often overlooked by conventional modules. The branch outputs are fused in a lightweight parallel structure and applied sequentially to recalibrate channels and refine spatial focus. This unified design allows SSPANet to emphasize discriminative tumor regions, improve representational power, and deliver consistent performance across deep learning backbones.

Structural diagram of the proposed SSPANet.
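To make the data flow of the design described above concrete, here is a minimal NumPy sketch of one forward pass over a single feature map of shape (C, H, W). It is not the authors' implementation: the description does not fix layer sizes or the fusion operator, so the following are assumptions. Z-pooling is modeled as a CBAM-style shared two-layer MLP (reduction ratio r) applied to the average- and max-pooled descriptors; strip pooling uses plain row and column averages with no learned layers; style pooling is taken per location as the mean and standard deviation across channels; and the hypothetical scalar weights `alpha` stand in for a learned fusion convolution. Function names such as `sspa_block` are illustrative only.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """Z-pooling channel attention (assumed CBAM-style shared MLP).

    x: feature map (C, H, W); w1: (C // r, C); w2: (C, C // r).
    Returns one weight in (0, 1) per channel, shape (C,).
    """
    avg = x.mean(axis=(1, 2))  # average-pooled descriptor, (C,)
    mx = x.max(axis=(1, 2))    # max-pooled descriptor, (C,)

    def mlp(v):
        # Shared two-layer bottleneck MLP with ReLU.
        return w2 @ np.maximum(w1 @ v, 0.0)

    return sigmoid(mlp(avg) + mlp(mx))


def spatial_attention(x, alpha=(1.0, 1.0, 1.0)):
    """Strip + style pooling spatial attention; returns weights of shape (H, W).

    alpha holds hypothetical fusion weights standing in for a learned conv.
    """
    # Strip pooling: average each row (horizontal strip) and each column
    # (vertical strip); broadcasting their sum gives every position a
    # summary of its entire row and column (long-range context).
    h_strip = x.mean(axis=2, keepdims=True)   # (C, H, 1)
    v_strip = x.mean(axis=1, keepdims=True)   # (C, 1, W)
    strip = (h_strip + v_strip).mean(axis=0)  # (H, W)

    # Style pooling (assumed per location): mean and standard deviation
    # across channels, computed in parallel with the strip branch.
    style_mean = x.mean(axis=0)  # (H, W)
    style_std = x.std(axis=0)    # (H, W)

    a, b, c = alpha
    return sigmoid(a * strip + b * style_mean + c * style_std)


def sspa_block(x, w1, w2):
    """Recalibrate channels first, then reweight spatial positions."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x)[None, :, :]


# Usage with random weights (reduction ratio r = 4).
rng = np.random.default_rng(0)
C, H, W, r = 8, 6, 5, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y = sspa_block(x, w1, w2)
print(y.shape)  # (8, 6, 5)
```

Because both attention maps pass through a sigmoid, the block only rescales activations by factors in (0, 1) and preserves the input shape, which is what lets a module of this kind be dropped between existing stages of a backbone such as VGG16 or ResNet50.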